Gravitational memory and spacetime symmetries


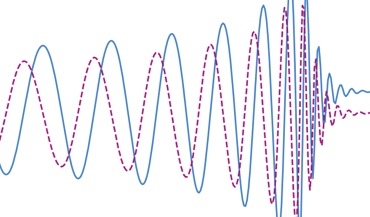

Symmetries of spacetime infinitely far away from gravitational fields may hint at new laws of nature

Setting up an account

Installing modules

Running Jupyter notebook

Disk space quotas

Jobs and parallelization

In order to use OzStar one needs:

This can be done here.

Some errors in later steps can be caused by the user not being a member of a project.

Work on the OzStar machine is carried out remotely over an SSH connection, from a terminal program (macOS) or a command line (Unix/Windows). Two tips simplify the everyday connection to OzStar:

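1. Instead of typing ssh [email protected], it is convenient to type just ozstar. This can be done by adding the line alias ozstar='ssh [email protected]' to your shell startup file (for example ~/.bashrc or ~/.zshrc).

2. Add your SSH key to the local ssh-agent with ssh-add ~/.ssh/ozstar_key, so that its passphrase does not have to be re-entered for every connection.

By default, almost no modules are loaded on OzStar for new accounts.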

See loaded modules with module list

See modules available for loading with module spider

To load modules:

1. Create a file .modulelist in your home directory (while ssh-connected to OzStar), with one module name per line.

2. Run while read in; do module load "$in"; done < .modulelist

If some modules do not work after loading (e.g. python3 in my case), I recommend adding them to .modulelist one by one. It turned out that python3 requires gcc to be loaded first, a message I did not see when I tried to load all my packages at once.

If there is a problem with this step or with module spider, it can usually be fixed by deleting the Lmod cache: rm /home/bgonchar/.lmod.d/.cache/* (replace bgonchar with your own username).
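The loading loop can be made a little more forgiving. Below is a minimal sketch of a hypothetical helper, load_modulelist (not part of OzStar or Lmod), that skips blank lines and lines starting with #, reports a module as failed when module load returns a non-zero status, and, when the module command is not available (for example when checking the file off-cluster), only prints what it would load. It assumes .modulelist holds one module name per line; listing gcc before python (e.g. gcc/6.4.0, then python/3.6.4, the versions loaded in jupyter.sh) respects the dependency mentioned above.

```bash
# Hypothetical helper (not part of Lmod): load every module listed in a file,
# one module name per line. Blank lines and lines starting with '#' are skipped.
# Without the `module` command (e.g. off-cluster) it only prints what it would load.
load_modulelist() {
  local list_file="${1:-$HOME/.modulelist}"
  local name failed=0
  if [ ! -r "$list_file" ]; then
    echo "cannot read $list_file" >&2
    return 1
  fi
  # `read` with the default IFS trims leading/trailing whitespace;
  # `|| [ -n "$name" ]` also handles a last line without a trailing newline.
  while read -r name || [ -n "$name" ]; do
    case "$name" in
      '' | '#'*) continue ;;
    esac
    if command -v module >/dev/null 2>&1; then
      # Runs in the current shell, so the environment changes persist.
      if ! module load "$name"; then
        echo "failed to load: $name" >&2
        failed=1
      fi
    else
      echo "would load: $name"
    fi
  done < "$list_file"
  return "$failed"
}
```

Usage: after sourcing the function (for example from ~/.bashrc), run load_modulelist ~/.modulelist. Loading happens in the current shell rather than a subshell, since a subshell would discard the environment changes made by module load.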

There is no trivial way to open a web browser and start using Jupyter notebook on OzStar.

We need to (1) set up the environment once (Step I) and (2) run a few commands each time we want a notebook (Step II).

This also requires completing the earlier step, "Installing modules on OzStar".

Step I. Setting up Jupyter notebook

1. In your home directory on OzStar create the file jupyter.sh with the following content (no need to change anything):

#!/bin/bash
#SBATCH --nodes 1
#SBATCH --time 4:00:00
#SBATCH --job-name jupyter-test
#SBATCH --output jupyter-log-%J.txt

## get tunneling info
XDG_RUNTIME_DIR=""
ipnport=$(shuf -i8000-9999 -n1)
ipnip=$(hostname -i)

## print tunneling instructions to jupyter-log-{jobid}.txt
echo -e "
Copy/Paste this in your local terminal to ssh tunnel with remote
-----------------------------------------------------------------
ssh -N -L $ipnport:$ipnip:$ipnport user@host
-----------------------------------------------------------------

Then open a browser on your local machine to the following address
------------------------------------------------------------------
localhost:$ipnport (prefix w/ https:// if using password)
------------------------------------------------------------------
"

module load gcc/6.4.0
module load python/3.6.4
. ~/jupyter/bin/activate

## launch the jupyter server
jupyter-notebook --no-browser --port=$ipnport --ip=$ipnip

2. In your home directory on OzStar, run the following commands:

virtualenv ~/jupyter
. ~/jupyter/bin/activate
pip install jupyter
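jupyter.sh draws its port at random with shuf, so on a busy node it can occasionally pick a port that another server already holds. One possible refinement, sketched below as a hypothetical pick_free_port function, is to retry until it finds a port on which nothing is listening. It assumes bash (it relies on bash's /dev/tcp/HOST/PORT redirection and $RANDOM) and only checks the given IP address, so it is a best-effort check rather than a guarantee: another process could still grab the port before Jupyter starts.

```bash
# Hypothetical helper: print a random port in [low, high] on which nothing
# is currently listening at the given IP address.
# Relies on bash's /dev/tcp/HOST/PORT redirection: opening it succeeds only
# if some process is already accepting connections on that port.
pick_free_port() {
  local ip="${1:-127.0.0.1}" low="${2:-8000}" high="${3:-9999}"
  local port tries=0
  while [ "$tries" -lt 50 ]; do
    tries=$((tries + 1))
    # $RANDOM is bash's built-in random integer in 0..32767.
    port=$((low + RANDOM % (high - low + 1)))
    # The subshell fails (connection refused) when the port is free.
    if ! (exec 3<>"/dev/tcp/$ip/$port") 2>/dev/null; then
      echo "$port"
      return 0
    fi
  done
  echo "no free port found in $low-$high after 50 tries" >&2
  return 1
}
```

To use it in jupyter.sh, one would define the function near the top, compute ipnip=$(hostname -i) first, and then set ipnport=$(pick_free_port "$ipnip") in place of the shuf line.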

Step II. Running Jupyter notebook

1. In your home directory on OzStar run sbatch jupyter.sh

The output gives you the job ID. Once the job starts, it writes a file jupyter-log-{job ID}.txt.

2. In your home directory on OzStar, run cat jupyter-log-{job ID}.txt

The output gives you an IP address and a port (4 digits).

3. On your local machine run ssh -N -L port:IP:port [email protected]

4. In the web browser go to address: http://localhost:port

5. When the browser asks for a token, copy it from the Jupyter startup message in the job's log file (the file printed with cat in step 2).

Instructions are adapted from Paul Easter and Ipyrad API - HPC tunnel.
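Steps 2 to 5 can be partly automated. The sketch below is a hypothetical helper, tunnel_info, that reads a jupyter-log-{job ID}.txt file and prints the ssh command to run locally and the browser URL with the token filled in. It assumes the log contains the ssh -N -L PORT:IP:PORT line echoed by jupyter.sh and a Jupyter startup line containing ?token=...; the second argument stands for your own OzStar login, since user@host is only the placeholder printed by jupyter.sh.

```bash
# Hypothetical helper: print the local ssh tunnel command and the browser URL
# (with token, if Jupyter has already logged it) from a jupyter.sh log file.
tunnel_info() {
  local log="$1" login="${2:-user@host}" fwd port token
  if [ ! -r "$log" ]; then
    echo "cannot read $log (has the job started yet?)" >&2
    return 1
  fi
  # Extract "PORT:IP:PORT" from the "ssh -N -L PORT:IP:PORT user@host" line.
  fwd=$(sed -n -E 's/.*ssh -N -L ([0-9]+:[^ ]+:[0-9]+).*/\1/p' "$log" | head -n 1)
  if [ -z "$fwd" ]; then
    echo "no tunnel line found in $log" >&2
    return 1
  fi
  port="${fwd%%:*}"
  # Jupyter logs its URL as ".../?token=<string>" once the server is up.
  token=$(grep -o -E 'token=[[:alnum:]]+' "$log" | head -n 1 | cut -d= -f2)
  echo "tunnel: ssh -N -L $fwd $login"
  if [ -n "$token" ]; then
    echo "url: http://localhost:$port/?token=$token"
  else
    echo "url: http://localhost:$port (no token in the log yet)"
  fi
}
```

Usage: tunnel_info jupyter-log-{job ID}.txt your-login, then paste the printed ssh command into a local terminal and open the printed URL. If the token line is missing, the server has probably not finished starting; rerun the command a little later.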

After following the instructions above, Python notebooks can access only the default modules. To make other modules available, one needs to:

Home directories on OzStar have a limit of 10 GB of disk space per user. For more space, project directories have to be used. To see the available disk resources, run quota. There is also a limit on the number of files, shown as "inode usage" in the quota output. Many small files can prevent one from writing to disk even when there is still enough space. To solve this, one has to find and remove them. To find where most of the small files are located, the following command can be used:

find . -type f | sed -e 's%^\(\./[^/]*/\).*$%\1%' -e 's%^\.\/[^/]*$%./%' | sort | uniq -c
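The command above prints one count per top-level entry, in alphabetical order. When a directory holds many entries, it can help to sort by count and keep only the largest. A minimal sketch, wrapping the same pipeline in a hypothetical count_files_by_dir function and adding sort -rn and head:

```bash
# Hypothetical helper: count regular files under each top-level entry of DIR
# (files sitting directly in DIR are grouped under "./") and print the TOP
# entries holding the most files first.
count_files_by_dir() {
  local dir="${1:-.}" top="${2:-10}"
  (
    # Work in a subshell so the caller's working directory is unchanged.
    cd "$dir" || exit 1
    find . -type f \
      | sed -e 's%^\(\./[^/]*/\).*$%\1%' -e 's%^\./[^/]*$%./%' \
      | sort | uniq -c | sort -rn | head -n "$top"
  )
}
```

For example, count_files_by_dir ~ 5 lists the five entries of the home directory that contain the most files, which is usually where inode usage can be reduced first.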

Out of the box, some MPI codes that split a calculation between multiple processes also start multi-threading, running several threads within each process. This oversubscribes the cores and can slow the calculation down, producing the message "job spends a significant time in sys calls" on the OzStar job monitor. To avoid this, one can hard-code one thread per process in Slurm submission scripts with the following command: export OMP_NUM_THREADS=1.
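A minimal Slurm submission script with this setting might look as follows. The resource requests and ./my_mpi_program are hypothetical placeholders, and the srun guard only exists so the script can also be run outside Slurm for a quick check.

```bash
#!/bin/bash
#SBATCH --job-name mpi-example
#SBATCH --ntasks 4
#SBATCH --cpus-per-task 1
#SBATCH --time 1:00:00

# One OpenMP thread per MPI process, so processes do not compete for cores.
export OMP_NUM_THREADS=1

# ./my_mpi_program is a placeholder for your own MPI executable;
# srun starts one copy per requested task.
if command -v srun >/dev/null 2>&1; then
  srun ./my_mpi_program
else
  echo "srun not found (not under Slurm); OMP_NUM_THREADS=$OMP_NUM_THREADS"
fi
```

If a code is meant to run several threads per process, a common alternative is to request them with --cpus-per-task and set export OMP_NUM_THREADS=${SLURM_CPUS_PER_TASK:-1}, so the thread count always matches the allocation.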


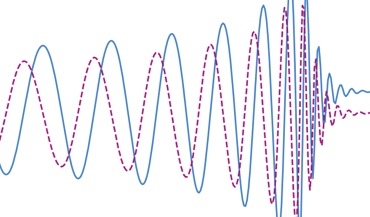

The nanohertz gravitational wave background

The nanohertz gravitational wave background

Is the common-spectrum process observed with pulsar timing arrays a precursor to the detection?